The rise of generative AI tools like Microsoft 365 Copilot has rapidly reshaped how modern workplaces function, streamlining document creation, automating repetitive tasks, and enhancing decision-making. But with this technological shift comes a serious question for business leaders: Are we deploying AI responsibly?

Ethical considerations around tools like Copilot aren’t just a matter of compliance. They’re about trust, fairness, transparency, and protecting both employees and organizational data. As AI becomes embedded in workflows, executives, HR leaders, and IT professionals must actively define how Copilot and similar technologies are introduced and governed.

This blog post explores the ethical guardrails leaders must consider before integrating AI into their workplace.

Why Does Ethics in AI Matter?

AI systems learn from data. That sounds simple—but in practice, it’s complex and risky. Because data can contain historical biases, systemic inequalities, or simply incomplete perspectives, AI tools can unintentionally magnify these flaws at scale.

According to a 2025 McKinsey report on AI superagency in the workplace, more than 60% of workers already use generative AI tools in some capacity, often without formal training or governance frameworks. The report also points out that organizations must prioritize ethical design, employee education, and role clarity to prevent misuse or dependency. These findings signal that ethics must be baked into deployment strategies from the outset—not addressed as an afterthought.

For leaders, it’s no longer enough to “trust the tech.” Responsible use of AI requires intentional frameworks, user education, and governance systems.

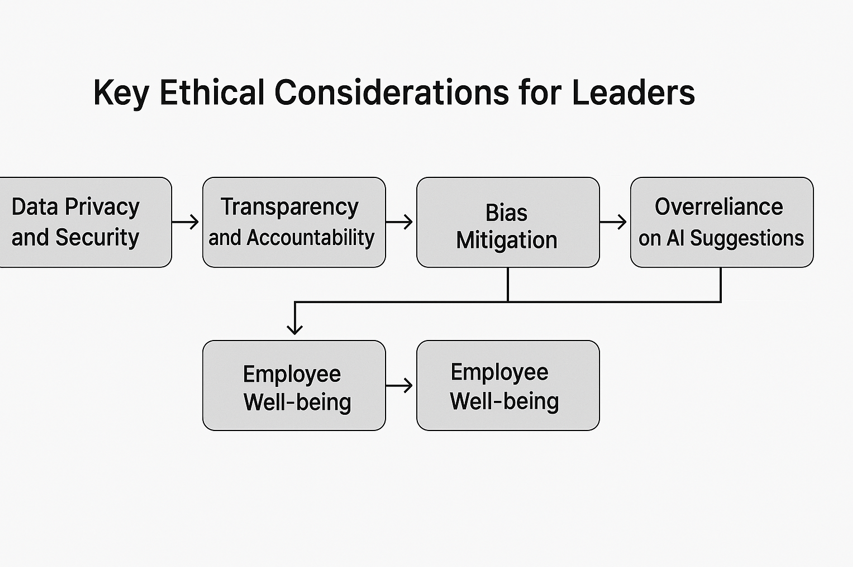

Key Ethical Considerations for Leaders

1. Data Privacy and Security

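Microsoft 365 Copilot operates within the organization’s Microsoft 365 security and compliance boundary: business content stays within the tenant, and prompts and responses are not used to train the foundation models behind Copilot. However, Copilot can surface any content a user already has permission to access, so overshared files become much easier to find. Leaders must ensure appropriate data governance measures, such as permission reviews and sensitivity labels, are in place to prevent unauthorized access and data breaches.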

2. Transparency and Accountability

Transparency about how AI influences decisions is crucial. Organizations should clearly communicate where tools like Copilot are used in decision-making so that employees understand the role AI plays in their work. Accountability means that a named person, not the tool, remains responsible for every decision that relies on AI output.

3. Bias Mitigation

AI systems can inadvertently perpetuate biases present in their training data. Bias mitigation strategies, such as fairness testing of AI-assisted outcomes and continuous monitoring, are essential to ensure equitable treatment of all employees.
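One way to make continuous monitoring concrete is to periodically compare outcome rates across employee groups for any process where AI output feeds a decision (for example, shortlisting candidates for internal roles). The minimal sketch below computes each group's selection rate and flags groups whose rate falls below 80% of the highest group's rate, a screening heuristic known as the four-fifths rule from US employment selection guidelines. The record format, group labels, and threshold are illustrative assumptions; nothing here is part of Copilot or any Microsoft API.

```python
from collections import defaultdict


def selection_rates(records):
    """Return {group: selected / total} from (group, was_selected) pairs."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def flag_disparate_impact(records, threshold=0.8):
    """Flag groups whose selection rate is below `threshold` x the best group's rate.

    Returns {group: impact_ratio} for flagged groups only.
    """
    rates = selection_rates(records)
    best = max(rates.values())
    if best == 0:
        return {}  # nobody was selected, so the ratios are undefined
    return {g: r / best for g, r in rates.items() if r / best < threshold}


if __name__ == "__main__":
    # Made-up data: group A selected 6 of 10, group B selected 3 of 10.
    sample = [("A", True)] * 6 + [("A", False)] * 4 + [("B", True)] * 3 + [("B", False)] * 7
    print(flag_disparate_impact(sample))
```

In this made-up sample, group B's selection rate is half of group A's, so B is flagged for human review. A flag is a prompt to investigate, not proof of bias; small groups in particular can produce large ratios by chance.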

4. Employee Well-being

AI monitoring tools have been linked to increased stress among employees. Leaders must balance the benefits of AI with the potential impact on employee well-being, ensuring that AI tools are used to support, rather than hinder, the workforce.

5. Overreliance on AI Suggestions

Copilot can draft summaries, recommendations, and insights, but its output can be inaccurate or incomplete. If employees stop thinking critically and accept all AI output as fact, judgment errors can creep in. Business leaders must therefore foster a culture in which AI assists, rather than replaces, human reasoning.

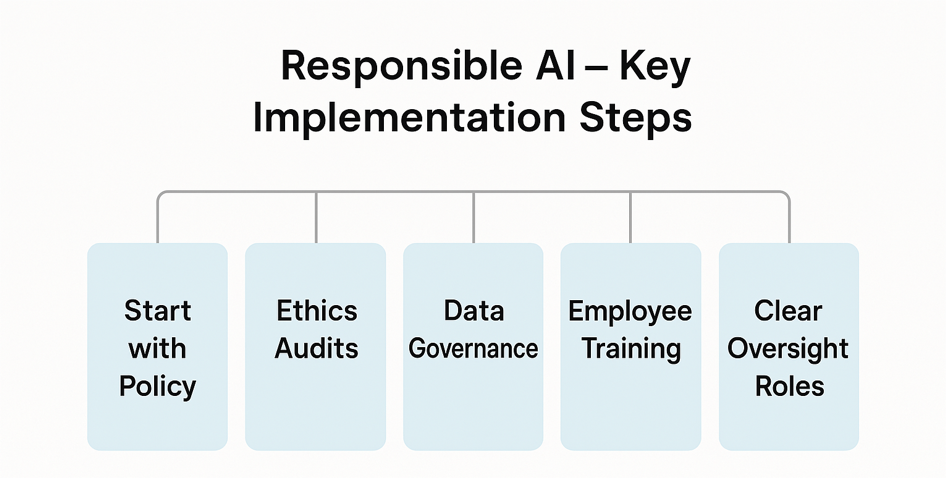

Best Practices for Responsible AI Deployment

Responsible AI isn’t plug-and-play; it requires structured thinking, ongoing assessment, and a multidisciplinary approach. Here are concrete steps leaders should take:

1. Start with Policy, Not Technology

Draft an internal Responsible AI policy. This should include:

- What Copilot can and cannot be used for

- How often content will be reviewed

- How complaints or concerns can be raised

2. Ethics-Based Auditing

Ethics-based auditing is more than checking code. It evaluates how well AI systems align with the values of fairness, accountability, and transparency. A basic checklist for ethics audits:

- Are datasets representative and regularly updated?

- Can algorithmic decisions be explained and challenged?

- Have diverse teams been involved in the AI lifecycle?
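The first checklist question, whether datasets are representative, can be partly automated. A minimal sketch, assuming each record in a dataset carries a group label and that you have a reference distribution to compare against (for example, current workforce composition); the 5-percentage-point tolerance is an arbitrary illustrative choice, and `representation_gaps` is a hypothetical helper, not a library function.

```python
from collections import Counter


def representation_gaps(dataset_groups, reference_shares, tolerance=0.05):
    """Compare each group's share of a dataset with its reference share.

    Returns {group: dataset_share - reference_share} for every group whose
    absolute gap exceeds `tolerance`. A group present in the reference but
    missing from the dataset has a dataset share of 0; a group missing from
    the reference has a reference share of 0.
    """
    counts = Counter(dataset_groups)
    total = sum(counts.values())
    gaps = {}
    for group in set(reference_shares) | set(counts):
        observed = counts[group] / total if total else 0.0
        expected = reference_shares.get(group, 0.0)
        gap = observed - expected
        if abs(gap) > tolerance:
            gaps[group] = gap  # positive = over-represented, negative = under-represented
    return gaps


# Made-up example: regional labels on training records vs. workforce composition.
records = ["east"] * 70 + ["west"] * 28 + ["north"] * 2
workforce = {"east": 0.6, "west": 0.3, "north": 0.1}
print(representation_gaps(records, workforce))
```

Here "east" is over-represented and "north" under-represented by more than the tolerance, while "west" is close enough to pass. Which groups and reference figures matter is a policy decision for the audit team, not something the code can answer.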

3. Strong Data Governance

Many ethical AI failures trace back to data failures: poor-quality or unrepresentative data, or content exposed to the wrong people. It’s essential to implement robust data management that includes:

- Access control for sensitive files

- Retention and deletion schedules

- Real-time auditing and alert systems
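Retention and deletion schedules are easiest to enforce when they are written down as data rather than kept in someone's head. The sketch below assumes a hypothetical file inventory in which each file is tagged with a category and a last-modified date, plus a hypothetical per-category retention period; it lists files that have outlived their schedule and files with no recognized category, both of which need a human decision. In a Microsoft 365 tenant this job is normally handled by Microsoft Purview retention policies and labels; the code only illustrates the underlying logic.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical retention schedule: category -> how long files are kept.
RETENTION = {
    "hr_record": timedelta(days=365 * 7),
    "meeting_notes": timedelta(days=365),
    "draft": timedelta(days=90),
}


@dataclass
class FileRecord:
    path: str
    category: str
    last_modified: date


def review_retention(files, today, retention=RETENTION):
    """Split files into (expired, unclassified).

    expired: files older than the retention period for their category.
    unclassified: files whose category has no retention rule, so they are
    surfaced for review instead of silently being kept forever.
    """
    expired, unclassified = [], []
    for f in files:
        period = retention.get(f.category)
        if period is None:
            unclassified.append(f)
        elif today - f.last_modified > period:
            expired.append(f)
    return expired, unclassified
```

Keeping the schedule in one table makes it reviewable by legal and HR, and the same inventory can feed the access-control and audit-alert checks listed above.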

4. Employee Engagement and Training

AI ethics isn’t just an IT or compliance issue. It affects how employees interact with their tools and perceive their roles.

“Employees are more likely to accept AI tools if they feel part of the process,” – Harvard Business Review, 2024.

Training programs should not only cover how to use Copilot but also why ethical considerations matter. Employees should understand when human judgment is needed and how to escalate questionable outputs.

5. Define Oversight Roles

Assign clear roles for Copilot oversight. This includes:

- AI Ethics Officers to handle escalations and policy decisions

- IT Managers to track and report usage analytics

- HR Professionals to assess the impact on employee morale and performance

Emerging Trends in AI Ethics

| Trend | What’s Happening |
| --- | --- |
| Global Regulations | The EU AI Act entered into force in August 2024, with obligations phasing in from 2025 to 2027, and is widely expected to become a model for AI governance worldwide. |
| AI Whistleblower Policies | Tech companies are developing safe channels for reporting unethical AI use. |
| Explainability Toolkits | Vendors are introducing new tools to make Copilot and similar AI systems more transparent and auditable. |
| Employee Rights Expansion | HR policies are starting to include AI-related protections, such as “algorithmic decision appeals.” |

Final Thoughts

The integration of Copilot into workplace systems marks a new chapter in human-computer collaboration. But it’s not just about what the tool can do; it’s about what it should do.

Executives need to look beyond functionality and think holistically. Ethical AI requires intentional design, continuous feedback loops, and leadership that values accountability over convenience.

By grounding AI usage in strong governance, inclusive policies, and transparent communication, leaders not only protect their organizations from risk but also earn long-term trust from their employees and stakeholders.

Need Help Navigating Copilot Deployment?

At Dynamics Solution and Technology, we specialize in ethical AI implementations through Microsoft Dynamics 365. From governance frameworks and role-based permissions to employee training and system audits, we help you deploy Copilot responsibly and effectively.

Let’s talk about building a Copilot strategy that fits your business—with ethics baked in.